ChatGPT has become the modern-day diary, a digital confessional where we ask the questions we’re too afraid to voice out loud. It’s our unbiased therapist, our life coach, our secret-keeper. We trust it with our most unfiltered doubts, assuming that digital void is a perfectly safe space.

But that safe space is only as secure as a phone’s lock screen. The innocent act of borrowing a partner’s phone can turn into an accidental deep dive into their most private thoughts. One woman learned this the hard way, when she least expected it.

More info: Reddit

A partner’s phone can hold their deepest, most private thoughts, and sometimes, you stumble upon them by accident

Image credits: Solen Feyissa / Unsplash (not the actual photo)

While borrowing her girlfriend’s phone, a woman saw a recent and deeply unsettling ChatGPT conversation

Image credits: Getty Images / Unsplash (not the actual photo)

Her girlfriend asked AI if she should end their relationship, but also how to find someone more compatible

Image credits: Freepik / Freepik (not the actual photo)

The discovery left her feeling ‘anxious and insecure,’ but she couldn’t confront her girlfriend without admitting she saw it

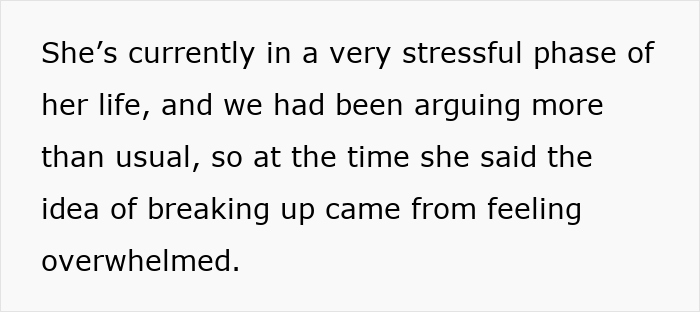

Image credits: Outrageous-Yak-3733

She was left wondering if her girlfriend was just venting to an AI or actively planning to leave her

A woman just wanted to use ChatGPT, a harmless request that would soon set off an explosion of insecurity in her relationship. Her girlfriend had recently suggested they break up during a stressful period but had quickly taken it back. The relationship had hit a rocky patch, but they’d smoothed things over and were back on track. Or so she thought.

She borrowed her partner’s phone for a quick (non-snooping) search and made an accidental and devastating discovery: a ChatGPT conversation from that same stressful week. Her girlfriend had asked the AI for breakup advice, but she had also asked a far more chilling question: how to find someone more compatible. In effect, she was asking for an instruction manual to find a replacement partner.

For the OP, this was a modern-day hell. She couldn’t confront her girlfriend without admitting she saw the chat, a confession that would instantly shift the blame from the person planning an exit strategy to the one who accidentally discovered it. She was left to spiral in silence, her anxiety growing with every passing moment, unable to ask for the reassurance she desperately needed.

So she’s left with a heart-wrenching, 21st-century problem. Is her girlfriend just stress-testing their relationship in a digital void, or is she actively “halfway out the door,” just waiting for the right moment to leave? She’s now turning to the online community, asking how she can possibly navigate this mess without revealing the source of her sudden, all-consuming doubt.

Image credits: dimaberlin / Freepik (not the actual photo)

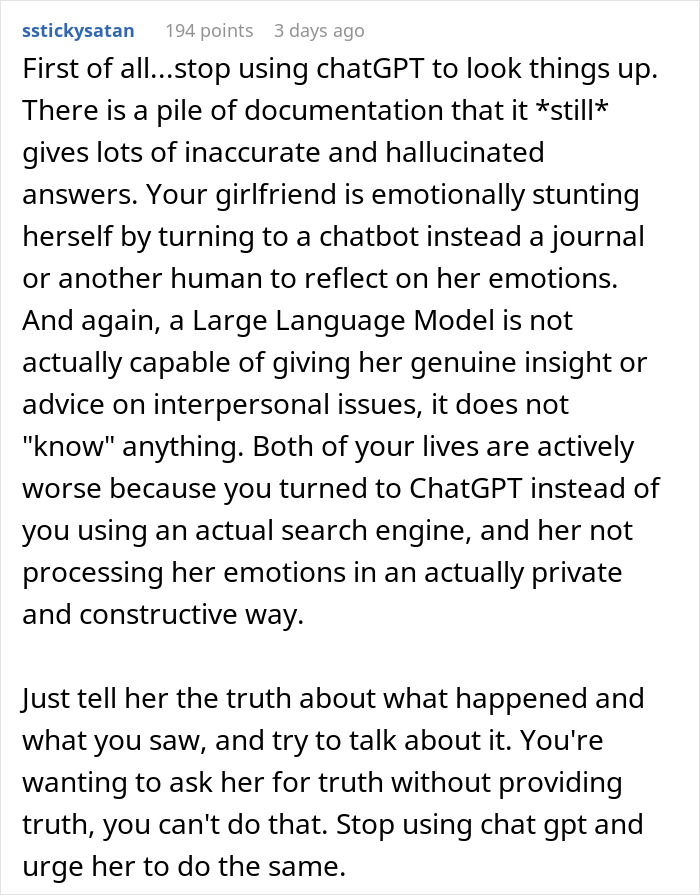

A recent survey from Sentio found that nearly one in four people have used chatbots for mental health support. People often turn to AI because it offers an anonymous, judgment-free space to explore difficult feelings without the immediate risk of a real-life conflict. The girlfriend’s conversation with ChatGPT was likely a misguided attempt to process her relationship doubts in what she perceived as a private, safe environment.

However, just because it’s common doesn’t mean it’s a good idea. Consumer technology experts at CNET pointed out that AI chatbots are not a substitute for real human connection or professional therapy. Relationship specialists also note that the advice they generate is often generic and lacks the empathy and nuanced understanding of a real person.

The AI can’t grasp the complexities of a relationship; it can only process data and offer a list of logical, but often emotionally tone-deaf, steps. The most alarming part isn’t that she used ChatGPT, but what she asked it. The question, “how to find someone more compatible,” goes beyond simple venting or exploring doubts.

It is proactive, solution-oriented language that suggests, as the OP fears, her girlfriend was “mentally exploring a future without [her].” This could simply be a sign of stress, but it may also indicate that she had emotionally disengaged to some degree and was using the AI as a tool to research an exit strategy, which would be a massive breach of relational trust.

How would you have approached this AI love triangle? Share some advice in the comments!

The internet warned her about the dangers of AI and also agreed that the girlfriend is allowed to have a space to vent a little